Performance comparison of C++, NumPy and CuPy (CUDA) for differentiation

One interesting question is what performance we can get from Python compared with C/C++, and what the benefit is of using CUDA for the same calculations. To answer this question, I calculated derivatives for different dataset sizes and different data types. I explain how to calculate a derivative in this video about derivatives and in this short article.
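For reference, here is a minimal NumPy sketch (not the author's code) of the two finite-difference formulas that appear in the benchmarks: the C++ program uses a forward difference and the Python program a central difference. The function names `forward_diff` and `central_diff` are illustrative. Comparing both against the exact derivative cos(x) shows that the central difference is more accurate for the same step dx.

```python
import numpy as np

def forward_diff(y, dx):
    """Forward difference (y[k+1] - y[k]) / dx, approximating y' at x[k] for k = 0..n-2."""
    return (y[1:] - y[:-1]) / dx

def central_diff(y, dx):
    """Central difference (y[k+1] - y[k-1]) / (2*dx), approximating y' at x[k] for k = 1..n-2."""
    return (y[2:] - y[:-2]) / (2 * dx)

dx = 0.01
x = np.arange(-10.0, 10.0, dx)
y = np.sin(x)

# The exact derivative of sin(x) is cos(x); measure the worst-case error of each formula.
err_forward = np.max(np.abs(forward_diff(y, dx) - np.cos(x[:-1])))
err_central = np.max(np.abs(central_diff(y, dx) - np.cos(x[1:-1])))
print(f"forward max error: {err_forward:.2e}")
print(f"central max error: {err_central:.2e}")
```

The forward-difference error shrinks proportionally to dx, the central-difference error proportionally to dx², which is why the second one is noticeably smaller here.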

Performance was measured with the float32 and float64 data types, with array sizes limited by the available memory.

Computer parameters

CPU: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz

Memory: 8GB

GPU: NVIDIA GeForce GTX 1070, 8GB

For the GPU, the code was ported from NumPy to the CuPy library. The performance figure was obtained from the dataset by linear regression, as described in the video about linear regression and in this article.
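Both benchmark programs reduce the measured (size, time) pairs to a single number k by fitting a straight line through the origin, t ≈ k·s, with least squares; minimizing Σ(tᵢ − k·sᵢ)² gives k = Σ sᵢtᵢ / Σ sᵢ². A minimal sketch of that fit, with a hypothetical helper name and synthetic (not measured) timings:

```python
import numpy as np

def slope_through_origin(sizes, times):
    """Least-squares slope k of times ≈ k * sizes, with the line forced through the origin."""
    s = np.asarray(sizes, dtype=np.float64)
    t = np.asarray(times, dtype=np.float64)
    return np.sum(s * t) / np.sum(s * s)

# Synthetic example (not measured data): times exactly proportional to array size.
sizes = [100, 1_000, 10_000, 100_000]
times_ms = [2e-6 * s for s in sizes]
k = slope_through_origin(sizes, times_ms)
print(f"k = {k:.3e} ms per element")
```

Because the fit minimizes absolute squared errors, the largest arrays dominate k, so a constant per-call overhead has little effect on the result.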

C++ code: this code was run with both float and double arrays.

Note also that, before the main measurement loop, some dummy (warm-up) calculations are performed to reduce timing instability caused by caching and fast memory reallocation.

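```cpp
#include <chrono>
#include <cmath>
#include <cstdio>

// Element type: float for the float32 run, double for the float64 run.
using real = float;

// Differentiates Y into Z for s elements and returns the elapsed time in milliseconds.
double calc(real *Z, const real *Y, double dx, long s)
{
    auto fr = std::chrono::high_resolution_clock::now();

    // Forward difference: Z[k] = (Y[k+1] - Y[k]) / dx
    for (long k = 1; k < s + 1; k++)
    {
        Z[k] = (Y[k + 1] - Y[k]) / dx;
    }

    auto to = std::chrono::high_resolution_clock::now();

    // Elapsed time as a fractional number of milliseconds
    return std::chrono::duration<double, std::milli>(to - fr).count();
}

int main()
{
    long smax = 400000000;   // 200000000 for the double run
    long sz = smax + 2;      // room for the Y[k+1] neighbour of the last element

    double xmin = -10;
    double dx = 0.01;

    real *X = new real[sz];
    real *Y = new real[sz];
    real *Z = new real[sz];

    // Sample y = sin(x) on a uniform grid
    for (long i = 0; i < sz; i++)
    {
        X[i] = xmin + dx * i;
        Y[i] = std::sin(X[i]);
    }

    // Accumulators for the least-squares slope through the origin: k = sum(s*t) / sum(s*s)
    double xy = 0;
    double xx = 0;

    // Array sizes s = 10^(i/100) from 10^10 down to 10^2; sizes above smax are skipped
    for (int i = 1000; i > 199; i--)
    {
        long s = static_cast<long>(std::pow(10.0, i / 100.0));
        if (s > smax) continue;

        double res = calc(Z, Y, dx, s);

        xy += res * s;
        xx += double(s) * s;   // double avoids overflow where long is 32-bit

        std::printf("%ld - %f\n", s, res);
    }

    std::printf("k = %.3e\n", xy / xx);

    delete[] X;
    delete[] Y;
    delete[] Z;
    return 0;
}
```

Similar code was written for Python. It was run with np.float32 and np.float64, using both `import cupy as np` and `import numpy as np`. To obtain more comparable values for CUDA, cycle = 20 was used, which repeats the calculation 20 times and increases the overall time 20-fold; the final slope is divided by cycle.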

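```python
import cupy as np  # import numpy as np  (for the CPU run)
import time

def calc(s, cycle):
    """Differentiate the first s elements `cycle` times; return the elapsed milliseconds."""
    fr = time.perf_counter()
    for kk in range(cycle):
        # Central difference: Z[k] = (Y[k+1] - Y[k-1]) / (2*dx)
        Z[1:s] = (Y[2:s+1] - Y[0:s-1]) / (2*dx)
    if hasattr(np, "cuda"):
        # CuPy launches kernels asynchronously: wait for the GPU before stopping the clock
        np.cuda.Stream.null.synchronize()
    to = time.perf_counter()
    return (to - fr) * 1000

s_max = 400_000_000   # 200_000_000 for float64
cycle = 20
dt = np.float32       # np.float64

sz = s_max + 2
xmin = -10.0
dx = 0.01

# Uniform grid X[i] = xmin + dx*i, as in the C++ version
X = np.linspace(xmin, xmin + dx*(sz-1), sz, dtype=dt)
Y = np.sin(X)
Z = np.zeros_like(X)

x = []
y = []

# Warm-up runs before the measurements
for ii in range(3):
    calc(s_max, cycle)

for i in range(1000, 199, -1):
    s = int(10**(i/100))
    if s > s_max:
        continue
    t = calc(s, cycle)
    print(s, t)
    x.append(s)
    y.append(t)

xx = np.array(x)
yy = np.array(y)

# Least-squares slope through the origin for a single cycle: milliseconds per element
print("k = ", (np.sum(xx*yy)/np.sum(xx**2))/cycle)
```

Due to the memory size, all calculations were performed with a maximum size of s_max = 400_000_000 elements for float32 and s_max = 200_000_000 for float64.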
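The two s_max values can be checked with a rough estimate. A minimal sketch, assuming that the three arrays X, Y and Z dominate memory use and ignoring the temporaries created during the computation; `arrays_gib` is an illustrative helper name:

```python
import numpy as np

def arrays_gib(s_max, dtype, n_arrays=3):
    """Memory in GiB for n_arrays arrays of s_max + 2 elements of the given dtype."""
    itemsize = np.dtype(dtype).itemsize  # 4 bytes for float32, 8 bytes for float64
    return n_arrays * (s_max + 2) * itemsize / 2**30

print(f"float32: {arrays_gib(400_000_000, np.float32):.2f} GiB")
print(f"float64: {arrays_gib(200_000_000, np.float64):.2f} GiB")
```

Both settings need about 4.47 GiB for the three arrays: halving the element count compensates for the doubled element size of float64.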

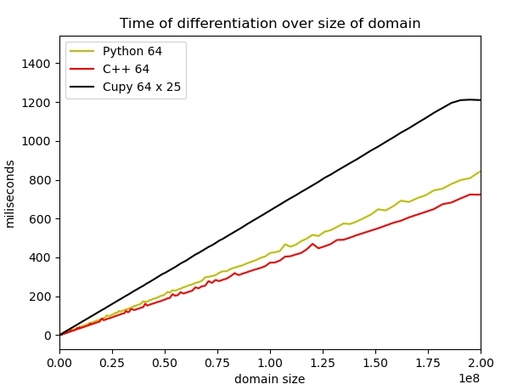

Comparison of NumPy, CuPy and C++ performance for differentiation of a linear numerical function.


The final performance results are given in the table below, as the regression slope k in milliseconds per calculated element. Less is faster.

| | float32 | float64 |
|---|---|---|
| C++ | 3.04×10⁻⁶ | 3.77×10⁻⁶ |
| NumPy | 1.69×10⁻⁶ | 4.28×10⁻⁶ |
| CuPy | 1.45×10⁻⁷ | 2.06×10⁻⁷ |

The same results as actual computational throughput (calculated derivatives per second):

| | float32 | float64 |
|---|---|---|
| C++ | 3.3×10⁸ | 2.7×10⁸ |
| NumPy | 5.9×10⁸ | 2.3×10⁸ |
| CuPy | 6.9×10⁹ | 4.9×10⁹ |
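The second table follows from the first: with k in milliseconds per element (the unit returned by the timing code), the throughput is 1000 / k derivatives per second. A small sketch of this conversion using the first table's values; `rates_per_second` is an illustrative name:

```python
# Slopes k from the first table, in milliseconds per calculated element.
k_ms = {
    ("C++", "float32"): 3.04e-6, ("C++", "float64"): 3.77e-6,
    ("NumPy", "float32"): 1.69e-6, ("NumPy", "float64"): 4.28e-6,
    ("CuPy", "float32"): 1.45e-7, ("CuPy", "float64"): 2.06e-7,
}

def rates_per_second(k_ms):
    """Convert ms per element into elements (derivatives) per second: (1000 ms/s) / k."""
    return {key: 1000.0 / k for key, k in k_ms.items()}

for (impl, dtype), rate in rates_per_second(k_ms).items():
    print(f"{impl:6s} {dtype}: {rate:.1e} derivatives/s")
```

The printed rates reproduce the second table.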

Or, as relative values, with NumPy performance taken as 1. The "32/64 acceleration" column shows the speed-up from switching from 64-bit to 32-bit floats.

| | float32 | float64 | 32/64 acceleration |
|---|---|---|---|
| C++ | 0.55 | 1.14 | 1.24 |
| NumPy | 1 | 1 | 2.53 |
| CuPy | 11.7 | 20.7 | 1.42 |
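Likewise, the third table can be recomputed from the first. Relative performance is the baseline slope divided by the slope of each implementation (a smaller k means faster), and the 32/64 column is the float64 slope divided by the float32 slope. A sketch, with `relative_table` as an illustrative name:

```python
# Slopes k from the first table, in milliseconds per calculated element.
k_ms = {
    "C++":   {"float32": 3.04e-6, "float64": 3.77e-6},
    "NumPy": {"float32": 1.69e-6, "float64": 4.28e-6},
    "CuPy":  {"float32": 1.45e-7, "float64": 2.06e-7},
}

def relative_table(k_ms, baseline="NumPy"):
    """Return {impl: (relative float32, relative float64, 32/64 acceleration)}."""
    base = k_ms[baseline]
    rows = {}
    for impl, k in k_ms.items():
        rows[impl] = (
            base["float32"] / k["float32"],  # speed relative to the baseline, float32
            base["float64"] / k["float64"],  # speed relative to the baseline, float64
            k["float64"] / k["float32"],     # speed-up from switching float64 -> float32
        )
    return rows

for impl, (r32, r64, acc) in relative_table(k_ms).items():
    print(f"{impl:6s} {r32:6.2f} {r64:6.2f} {acc:6.2f}")
```

Because the inputs are the rounded values from the first table, a few ratios differ from the third table in the last digit.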

Conclusion

If the memory limits are acceptable for your task, CuPy is the best choice for float64, and NumPy is very good at float32. C++ is reasonable for float64.

It is interesting to note that with float32 the overall performance of C++ is very low compared with Python: NumPy is about 1.8 times faster.

One core usage

During this experiment, only one CPU core was used: the `top` monitor showed a CPU load of 100% (one full core) for this script.

Published: 2022-07-26 11:45:27

Updated: 2022-07-26 13:43:09